Deep learning teaches computers to do what comes naturally to humans: learn by example. It's a key technology behind self-driving cars, enabling them to distinguish pedestrians from other objects on the road.

Deep learning models learn to perform classification tasks directly from images, text, or sound, often with very high accuracy. They are trained on large sets of labeled data using neural network architectures that contain many layers.

In this guide, we’ll cover:

- How does deep learning work?

- Creating and training deep learning models

- Deep learning vs machine learning

- Deep learning applications

- A brief history of deep learning

How does deep learning work?

Deep learning neural networks loosely mimic how the human brain works by combining data inputs, weights, and biases. Working together, these three components allow the network to recognize, classify, and describe objects within data.
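At the level of a single node, "combining inputs, weights, and bias" means multiplying each input by a weight, adding a bias, and passing the result through an activation function. The minimal sketch below assumes a sigmoid activation and uses made-up weight and bias values purely for illustration; a real network learns these values during training.

```python
import math


def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """One node's output: activation(weighted sum of inputs + bias)."""
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one weight")
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)


# Hypothetical values for illustration only (not learned from real data).
inputs = [0.5, 0.8, 0.2]
weights = [0.4, -0.6, 0.9]
bias = 0.1

print(round(neuron(inputs, weights, bias), 4))  # prints 0.5
```

Here the weighted sum (-0.1) is exactly cancelled by the bias, so the output is sigmoid(0) = 0.5; changing any weight or the bias pushes the output toward 0 or 1.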

These networks consist of multiple layers of interconnected nodes, each building on the previous layer to refine and optimize a prediction or categorization. This progression of computations through the network is called forward propagation. The input and output layers are called visible layers: the input layer is where the model ingests data for processing, and the output layer is where the final prediction or classification is made.
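Forward propagation repeats the single-node computation layer by layer, with each layer's outputs becoming the next layer's inputs. The sketch below assumes a tiny fully connected network (2 inputs, one hidden layer of 3 nodes, 1 output) with sigmoid activations and arbitrary, hypothetical weights. The input and output are the visible layers; the hidden layer sits between them.

```python
import math

# A layer is (weights, biases); weights[j] holds the incoming weights for node j.
Layer = tuple[list[list[float]], list[float]]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def layer_forward(inputs: list[float], layer: Layer) -> list[float]:
    """Apply one fully connected layer: sigmoid(weighted sum + bias) per node."""
    weights, biases = layer
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]


def forward(inputs: list[float], layers: list[Layer]) -> list[float]:
    """Forward propagation: feed each layer's output into the next layer."""
    activations = inputs
    for layer in layers:
        activations = layer_forward(activations, layer)
    return activations


# Hypothetical, untrained weights for a 2 -> 3 -> 1 network.
hidden: Layer = ([[0.2, -0.5], [0.7, 0.1], [-0.3, 0.8]], [0.0, 0.1, -0.1])
output: Layer = ([[0.6, -0.4, 0.9]], [0.05])

prediction = forward([1.0, 0.5], [hidden, output])
print(prediction)  # a single value between 0 and 1
```

Representing each layer as plain lists keeps the sketch dependency-free; libraries such as NumPy or PyTorch express the same step as a matrix multiplication.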

Backpropagation uses algorithms such as gradient descent to calculate the errors in predictions. It then adjusts the weights and biases of the function by moving backward through the layers, which is how the model is trained. Together, forward propagation and backpropagation let a neural network make predictions and correct its errors, so it becomes more accurate over time. Different types of neural networks suit specific problems, such as:

Recurrent neural networks (RNNs)

Used in speech recognition and natural language applications because they are designed to handle time-series or sequential data.

Convolutional neural networks (CNNs)

Mainly used in image classification and computer vision, CNNs can detect features and patterns in images.
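The forward propagation and backpropagation loop described earlier in this section can be sketched end to end in plain Python. This is a minimal illustration under stated assumptions, not a production implementation: a 2-2-1 network with sigmoid activations, binary cross-entropy loss, per-example (stochastic) gradient descent, hypothetical hand-picked starting weights, and the logical OR function as a toy labeled data set.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


# Toy labeled data set (an assumption for illustration): the logical OR function.
DATA = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]


def train(epochs: int = 5000, lr: float = 0.5):
    """Train a 2 -> 2 -> 1 sigmoid network with backpropagation and SGD."""
    # Hypothetical starting weights (asymmetric so the two hidden nodes differ).
    w_hidden = [[0.5, -0.4], [0.3, 0.8]]  # w_hidden[j][i]: input i -> hidden node j
    b_hidden = [0.0, 0.0]
    w_out = [0.2, -0.3]  # hidden node j -> output node
    b_out = 0.0

    def predict(x):
        """Forward propagation; returns hidden activations and the prediction."""
        h = [sigmoid(sum(w * xi for w, xi in zip(w_hidden[j], x)) + b_hidden[j])
             for j in range(2)]
        y_hat = sigmoid(sum(w * hj for w, hj in zip(w_out, h)) + b_out)
        return h, y_hat

    def loss():
        """Average binary cross-entropy over the data set."""
        total = 0.0
        for x, y in DATA:
            p = min(max(predict(x)[1], 1e-12), 1 - 1e-12)  # avoid log(0)
            total -= y * math.log(p) + (1 - y) * math.log(1 - p)
        return total / len(DATA)

    initial_loss = loss()
    for _ in range(epochs):
        for x, y in DATA:
            h, y_hat = predict(x)  # forward pass
            # Output error: for sigmoid + cross-entropy, dLoss/dz_out = y_hat - y.
            delta_out = y_hat - y
            # Chain rule: push the error back through w_out and each hidden sigmoid
            # (computed before w_out is updated).
            delta_hidden = [delta_out * w_out[j] * h[j] * (1 - h[j]) for j in range(2)]
            # Gradient descent: move every weight and bias against its gradient.
            for j in range(2):
                w_out[j] -= lr * delta_out * h[j]
                b_hidden[j] -= lr * delta_hidden[j]
                for i in range(2):
                    w_hidden[j][i] -= lr * delta_hidden[j] * x[i]
            b_out -= lr * delta_out
    return initial_loss, loss(), predict


initial, final, predict = train()
print(f"loss before training: {initial:.3f}, after: {final:.3f}")
for x, y in DATA:
    print(x, "-> predicted", round(predict(x)[1]), "target", int(y))
```

Writing the gradients by hand shows exactly where the chain rule enters; frameworks such as PyTorch or TensorFlow compute these gradients automatically.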

Creating and training deep learning models

There are three common ways to use deep learning for object classification:

1. Training from scratch

You gather a very large labeled data set and design a network architecture that learns both the features and the model. This approach is less common, however, because training such a network from scratch can take days or weeks.

2. Transfer learning

This approach fine-tunes a pre-trained model. You start with an existing network, such as GoogLeNet, and feed it new data containing previously unknown classes. After a few adjustments, the network can perform the new task. Transfer learning needs far less data (thousands of images instead of millions), so training takes much less time.

3. Feature extraction

A less common and more specialized approach is to use the network as a feature extractor. Because each layer learns certain features from the data, you can pull these features out of the network at any point during training. They can then serve as input to a conventional machine learning model, such as a support vector machine (SVM).
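Transfer learning and feature extraction both reuse layers that were already trained on another task. The sketch below shows the feature-extraction variant under loudly stated assumptions: the "pre-trained" layer is hypothetical, with made-up fixed weights standing in for layers of a network such as GoogLeNet; it is frozen, its outputs serve as features, and only a new, small classifier (logistic regression, substituted here for an SVM to keep the code short) is trained on them. Fine-tuning in transfer learning would additionally update some of the pre-trained weights.

```python
import copy
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical "pre-trained" layer: 2 raw inputs -> 3 features. Never updated below.
PRETRAINED_W = [[1.5, -1.0], [-0.8, 1.2], [0.9, 0.9]]
PRETRAINED_B = [0.0, 0.1, -0.5]


def extract_features(x: list[float]) -> list[float]:
    """Run the frozen pre-trained layer; its activations become the features."""
    return [sigmoid(sum(w * xi for w, xi in zip(ws, x)) + b)
            for ws, b in zip(PRETRAINED_W, PRETRAINED_B)]


def train_head(data, epochs: int = 2000, lr: float = 0.5):
    """Train only a new logistic-regression classifier on the extracted features."""
    features = [(extract_features(x), y) for x, y in data]  # extracted once
    w = [0.0] * len(PRETRAINED_W)
    b = 0.0
    for _ in range(epochs):
        for f, y in features:
            p = sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b)
            grad = p - y  # cross-entropy gradient w.r.t. the classifier's logit
            w = [wi - lr * grad * fi for wi, fi in zip(w, f)]
            b -= lr * grad
    return w, b


def classify(x: list[float], w: list[float], b: float) -> int:
    f = extract_features(x)
    return int(sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b) >= 0.5)


# Hypothetical labeled data for the "new" task.
NEW_TASK = [([2.0, 0.0], 1), ([1.5, -0.5], 1), ([0.0, 2.0], 0), ([-0.5, 1.5], 0)]

frozen_before = copy.deepcopy(PRETRAINED_W)
w, b = train_head(NEW_TASK)
print(PRETRAINED_W == frozen_before)  # True: the pre-trained layer was never updated
print([classify(x, w, b) for x, _ in NEW_TASK])  # [1, 1, 0, 0]
```

Because the frozen layer never changes, its features can be computed once up front, which is a large part of why this approach needs far less computation than training from scratch.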

GPU acceleration significantly speeds up processing, reducing the time needed to train networks.

Deep learning vs machine learning

Deep learning is a subfield of machine learning. In a typical machine learning workflow, relevant features are first extracted manually from images. These features are then used to create a model that categorizes the objects in an image.

In deep learning workflows, by contrast, features are extracted from images automatically. Deep learning also performs ‘end-to-end learning’: a network is given raw data and a task, such as classification, and learns how to perform it automatically.

Deep learning algorithms also scale with data, whereas the performance of shallow learning methods tends to plateau. As the amount of training data grows, the accuracy of deep learning algorithms generally keeps improving. These algorithms often need GPUs to process data quickly.

Classical machine learning often makes more sense when you don't have a large labeled data set or a high-performance GPU. Because deep learning models are more complex, they need a high volume of labeled images for accurate results, and a high-performance GPU to analyze that data quickly.

What are the main applications of deep learning?

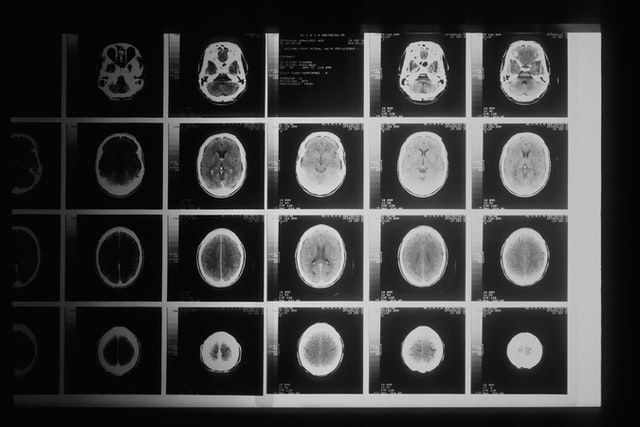

Healthcare

In healthcare, deep learning helps medical experts analyze and assess more images in less time. This is increasingly important as hospital records and images become more widely digitized.

Financial services

Financial institutions use deep learning-driven predictive analytics for algorithmic stock trading, fraud detection, business risk assessment for loan approvals, and credit management.

Law enforcement

By analyzing and learning from transactional data, deep learning algorithms can identify dangerous patterns that indicate potential criminal or fraudulent activity.

With computer vision, speech recognition, and other applications, deep learning improves both the effectiveness and efficiency of investigative analysis. It extracts evidence and patterns from images, sound recordings, and documents, allowing law enforcement to analyze large amounts of data faster and more accurately.

Customer service

Simple chatbots use natural language processing and, in some cases, visual recognition. More sophisticated chatbots use learning to determine whether an ambiguous question has multiple possible answers. Based on the customer's responses, the chatbot then tries to answer the question directly or routes the conversation to a human employee.

Aerospace and defense

In aerospace and defense, deep learning is used to identify objects in satellite imagery, helping to locate areas of interest and identify safe and unsafe zones for troops.

Electronics

Deep learning is driving the development of automated hearing and speech translation. For example, home assistant devices use it to respond to voice commands and learn user preferences.

Industrial automation

With deep learning, it’s possible to improve worker safety around heavy machinery by automating the detection of people or objects within unsafe distances of machinery.

Automated driving

In self-driving cars, such as Tesla’s, deep learning algorithms automatically detect objects and pedestrians, which helps to reduce accidents.

A brief history of deep learning

1943: Walter Pitts and Warren McCulloch write A Logical Calculus of the Ideas Immanent in Nervous Activity, a paper that presents a mathematical model of a biological neuron. Although it has no learning mechanism and very limited capabilities, the McCulloch-Pitts neuron paves the way for artificial neural networks and deep learning.

1957: Frank Rosenblatt introduces ‘The Perceptron’ in his paper The Perceptron: A Perceiving and Recognizing Automaton. It has true learning capabilities and can perform binary classification on its own, and it sparks a revolution in shallow neural network research.

1960: In his paper Gradient Theory of Optimal Flight Paths, Henry J. Kelley presents the first version of a continuous backpropagation model.

1962: In his paper The Numerical Solution of Variational Problems, Stuart Dreyfus presents a backpropagation model that uses the simple derivative chain rule instead of dynamic programming, which earlier backpropagation models had relied on.

1965: Alexey Grigoryevich Ivakhnenko and Valentin Grigorʹevich Lapa create a hierarchical representation of a neural network that uses a polynomial activation function and is trained with the Group Method of Data Handling (GMDH). It is now considered the first multi-layer perceptron, and Ivakhnenko is often regarded as the father of deep learning.

1971: Alexey Grigoryevich Ivakhnenko creates an 8-layer deep neural network using GMDH.

1980: The first convolutional neural network (CNN) architecture is developed by Kunihiko Fukushima. It’s capable of recognizing visual patterns like handwritten characters.

1986: Terry Sejnowski invents NETtalk, a neural network that learns to pronounce written English text by being shown text as input and comparing its output with phonetic transcriptions. In the paper Learning Representations by Back-Propagating Errors, David Rumelhart, Geoffrey Hinton, and Ronald Williams demonstrate the successful implementation of backpropagation in neural networks, paving the way for training complex deep neural networks. Paul Smolensky invents a variation of the Boltzmann Machine, the Restricted Boltzmann Machine, which later becomes popular for building recommender systems.

1989: By using backpropagation, Yann LeCun trains a convolutional neural network that is able to recognize handwritten digits, paving the way for modern computer vision.

1997: Sepp Hochreiter and Jürgen Schmidhuber publish the paper Long Short-Term Memory, which introduces the LSTM, a recurrent neural network architecture that goes on to revolutionize deep learning in the following decades.

2006: The paper A Fast Learning Algorithm for Deep Belief Nets, by Geoffrey Hinton, Simon Osindero, and Yee-Whye Teh, describes stacking multiple RBMs in layers to form ‘Deep Belief Networks’, offering a more efficient way to train on large amounts of data.

2008: Andrew Ng’s group at Stanford advocates using graphics processing units (GPUs) to train deep neural networks, speeding up training many-fold.

2009: Fei-Fei Li, a professor at Stanford, launches ImageNet, a database of 14 million labeled images that becomes an important benchmark for deep learning researchers.

2014: Ian Goodfellow creates the GAN, or Generative Adversarial Network. Because it can synthesize realistic data, it paves the way for new applications of deep learning in science, art, and fashion.

2019: Yoshua Bengio, Geoffrey Hinton, and Yann LeCun receive the Turing Award for their contributions to deep learning and artificial intelligence.

For more resources like this, AIGENTS have created 'Roadmaps' - clickable charts showing you the subjects you should study and the technologies that you would want to adopt to become a Data Scientist or Machine Learning Engineer.

Follow us on LinkedIn