Few technologies have developed as rapidly in recent years as deep learning. In this article, we’re going to look at four applications of deep learning in the visual realm that you can use right now to take advantage of its stunning capabilities.

How is deep learning being used in computer vision?

1. Image upscaling

We’re going to start simple. However, this is probably the most deceptively complex item on this list. For years, the idea of upscaling an image while maintaining its quality was the stuff of science fiction (or lazily written episodes of procedural TV crime dramas). But it seems like those shows might have been onto something, after all. The software that exploits this form of deep learning is incredibly easy to use, and it’s free!

Indeed, all you need to do is find an image you want to upscale, and then it’s just a matter of letting the deep-learning algorithm do its thing. Many sites offer this service, but three I’d recommend are waifu2x, icons8.com, and deep-image.ai. There are also paid services such as Gigapixel AI from Topaz Labs. Gigapixel AI is extremely capable and robust, and you can try it for free to see how it stacks up against its free competitors. Honestly, though, this is one of those things that has to be seen to be believed, because words alone don’t quite do the results justice.

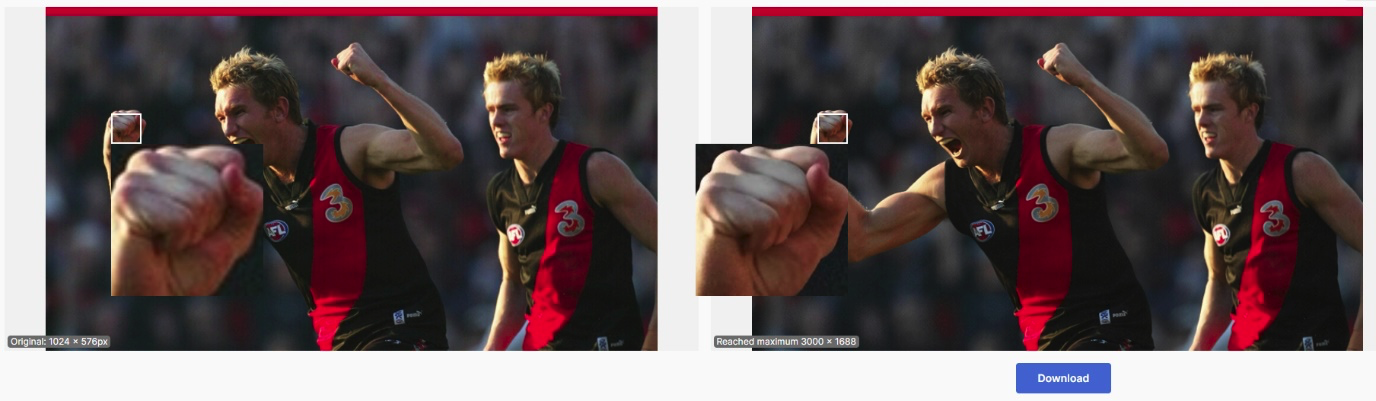

Below are two photos of former AFL footballer James Hird. On the left is the original low-res image, while on the right is the beefed-up upscaled image, which has about 8.6 times as many pixels. The right image uses the AI upscaler from icons8.com. In each photo, a white square lets you easily compare the two images. But as you know, pixels aren’t everything. If you look at the blown-up image of Hird’s right fist, you’ll notice how much clearer the right image is (and it’s far from subtle!).
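It’s worth pausing on what “8.6x the number of pixels” means, because pixel count grows with width times height. The small sketch below works out the per-side scale factor implied by that ratio. The function names and the 400x300 source size are made up for illustration (the real dimensions of the photo aren’t given here), and it assumes the aspect ratio is preserved.

```python
import math


def linear_scale_from_pixel_ratio(pixel_ratio):
    """Return the per-side scale factor implied by a total pixel-count ratio."""
    if pixel_ratio <= 0:
        raise ValueError("pixel_ratio must be positive")
    # Pixel count is width * height, so each side grows by the square root.
    return math.sqrt(pixel_ratio)


def upscaled_size(width, height, pixel_ratio):
    """Return the (width, height) after upscaling, keeping the aspect ratio."""
    scale = linear_scale_from_pixel_ratio(pixel_ratio)
    return round(width * scale), round(height * scale)


# Hypothetical 400x300 source image (an assumption, not the real photo size).
scale = linear_scale_from_pixel_ratio(8.6)
new_width, new_height = upscaled_size(400, 300, 8.6)
print(f"per-side scale: {scale:.2f}x")
print(f"400x300 -> {new_width}x{new_height}")
```

In other words, 8.6 times the pixels is a bit under 3 times the width and 3 times the height, which is a useful sanity check when comparing upscalers that advertise “2x” or “4x” modes.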

I should point out, however, that the latter two websites offer only a limited number of free conversions, whereas waifu2x has no such limit (although it has an annoying captcha for each image to keep bot spam from clogging its servers). It is honestly difficult to say which AI upscaler is the best, and I suspect the answer depends heavily on the image you choose. If you do want to have a go yourself, though, I strongly advise trying a plethora of images on a variety of upscalers.

While AI upscalers may seem like magic to most (myself included), they have their limitations and aren’t infallible. For example, an AI upscaler will probably have a hard time turning undecipherable text into something readable, but I suppose it wouldn’t hurt to try. On the other hand, a good upscaler is great at wiping out pixelated edges and other graphical quirks. The best thing to do is to experiment and see what kind of results you can achieve.

2. Video upscaling

In light of static image upscaling, video upscaling probably just seems like a natural extension; after all, isn’t video just a series of sequential still frames? Well… yes! But sophisticated algorithms exist that are tailor-made for video. For example, the acclaimed program Topaz Video Enhance AI factors in multiple sequential frames so it can render a consistent and believable final product. The results, again, truly do speak for themselves. The video is not only upscaled but also denoised, and the difference from the original is night and day.

You might be thinking that such powerful software is expensive. Well, it’s not cheap. Expect to shell out $300 for Video Enhance AI. However, you don’t need to fork out 300 big ones to try it out on your old wedding video. You can actually try the software for an entire month with no limitations. In my opinion, that is a fantastic deal.

I’ve personally used the software to improve the fidelity of my favourite band’s music videos, and the results are truly impressive. There are some artifacts in certain circumstances, but on the whole you probably won’t have any issues. For instance, the algorithm seems to have a little trouble with eyes and faces in low-fidelity video, but this can be mitigated by blending in the original video (which is what I did for the few cases that were a problem during my remastering experiment).
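For the curious, “blending in the original video” can be as simple as a weighted mix of the two versions of each frame, which is what lowering a layer’s opacity does in a video editor. The sketch below is only an illustration of that idea: the function name, the nested-list grayscale frames, and the 25% weight are all assumptions of mine, and it assumes both frames have already been brought to the same resolution.

```python
def blend_frames(original, processed, original_weight):
    """Linearly mix two same-sized grayscale frames (rows of 0-255 values)."""
    if not 0.0 <= original_weight <= 1.0:
        raise ValueError("original_weight must be between 0 and 1")
    if len(original) != len(processed):
        raise ValueError("frames must have the same number of rows")

    blended = []
    for row_original, row_processed in zip(original, processed):
        if len(row_original) != len(row_processed):
            raise ValueError("rows must have the same length")
        # Each output pixel is a weighted average of the two source pixels.
        blended.append([
            round(original_weight * o + (1.0 - original_weight) * p)
            for o, p in zip(row_original, row_processed)
        ])
    return blended


# Tiny 2x2 example frames with made-up pixel values.
original_frame = [[100, 200], [50, 0]]
upscaled_frame = [[120, 180], [70, 40]]

# Keep 25% of the original and 75% of the AI output.
print(blend_frames(original_frame, upscaled_frame, 0.25))
```

A higher weight on the original hides more of the AI’s mistakes but also gives back more of the original’s softness, so in practice you would only apply it to the shots (or regions, such as faces) that actually look wrong.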

One other issue is the time taken to upscale video. It used to take a really long time to upscale even shorter videos (such as the aforementioned music videos), so I would leave the program running overnight to convert 480p video to 1080p, and it would usually take the full 8 hours. That’s all well and good for short videos, but at that rate, converting even a short 22-minute episode of TV would take days. If you also use your computer for other high-end processing, slowdown could be an issue while the conversion is running.

Topaz has addressed this, though, with videos seemingly processing roughly 4 times quicker than in the older versions I’ve run. Still, if you want to upscale an entire TV series or movie, just know that it won’t be a quick process (depending on your hardware). Speaking of which, I have seen quite a few people already upload upscaled versions of their favourite TV shows, and the results have been great.
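To put rough numbers on this, here is a back-of-the-envelope estimate. It assumes the overnight job was a music video of about 4 minutes (my assumption for illustration; the exact length will vary) and that processing time scales linearly with footage length, which will not hold exactly across different hardware and source material. The function name is hypothetical.

```python
def hours_to_upscale(video_minutes, hours_per_video_minute, speedup=1.0):
    """Estimate processing hours, assuming time scales linearly with length."""
    if video_minutes < 0 or hours_per_video_minute < 0:
        raise ValueError("durations must be non-negative")
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return video_minutes * hours_per_video_minute / speedup


# Assumption: the 8-hour overnight run covered a music video of ~4 minutes.
ASSUMED_MUSIC_VIDEO_MINUTES = 4
rate = 8 / ASSUMED_MUSIC_VIDEO_MINUTES  # processing hours per minute of video

old_estimate = hours_to_upscale(22, rate)             # older, slower versions
new_estimate = hours_to_upscale(22, rate, speedup=4)  # roughly 4x quicker

print(f"22-minute episode, old speed: {old_estimate:.0f} h ({old_estimate / 24:.1f} days)")
print(f"22-minute episode, 4x faster: {new_estimate:.0f} h")
```

Under those assumptions, a 22-minute episode drops from roughly two days to a single long overnight run, which is the difference between impractical and merely slow.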

But it’s not just old DVD and VHS transfers that are getting the AI love; even videos with crisp 1080p sources are getting 4K upscales where none previously existed, with results that are close to the real thing. I’d definitely recommend trying the software out; you’ve got nothing to lose.

3. Depth-Aware Video Frame Interpolation

As far as AI is concerned, I’d say the amount of controversy this technology has garnered is second only to deepfake technology (but more on that later). Depth-Aware Video Frame Interpolation, also known as DAIN, is software that “aims to synthesize non-existent frames in-between the original frames”.

There are two apps that can perform this task for you. The original is Dain-App, which used to be free but now costs $10; the other is Flowframes, which does exactly the same thing but doesn’t cost you a cent. The latter app also has the benefit of being able to run on any modern GPU, whereas Dain is limited to specific kinds of cards from Nvidia. Furthermore, Flowframes also has the Dain algorithm baked in as an optional extra (in addition to the RIFE algorithm that comes with Flowframes). In the immortal words of Borat, “Very nice.”

But how is this software useful? Well, it’s actually useful in a surprising number of ways.

The biggest ways, though, are as follows:

- Creating clean slow-motion video from native footage

- Giving a higher frame rate to existing videos, including footage from sport, video games, and TV/movies (especially animation)
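To make “synthesizing in-between frames” concrete, here is a deliberately naive sketch that doubles the frame rate by crossfading each pair of neighbouring frames. This is not how DAIN or RIFE work: those estimate how things move between frames (and, in DAIN’s case, how far away they are), whereas a plain crossfade produces ghosting on anything that moves. The function names and the flat lists of grayscale values are assumptions made purely for illustration.

```python
def interpolate_frames(frame_a, frame_b, frames_between):
    """Return evenly spaced crossfades between two same-sized frames."""
    if frames_between < 0:
        raise ValueError("frames_between must be non-negative")
    if len(frame_a) != len(frame_b):
        raise ValueError("frames must have the same number of pixels")

    synthesized = []
    for i in range(1, frames_between + 1):
        t = i / (frames_between + 1)  # position between frame_a (0) and frame_b (1)
        synthesized.append([
            round((1 - t) * a + t * b) for a, b in zip(frame_a, frame_b)
        ])
    return synthesized


def double_frame_rate(frames):
    """Insert one synthesized frame between each consecutive pair of frames."""
    if not frames:
        return []
    output = [frames[0]]
    for previous, following in zip(frames, frames[1:]):
        output.extend(interpolate_frames(previous, following, 1))
        output.append(following)
    return output


# Three tiny two-pixel "frames" with made-up grayscale values.
clip = [[0, 100], [100, 200], [200, 0]]
print(double_frame_rate(clip))
```

Both uses in the list above come from the same output: play the doubled frames at twice the original frame rate and you get smoother motion at normal speed; play them at the original frame rate and you get half-speed slow motion.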

You might have been wondering earlier what I meant by “controversial”. What could possibly be controversial about adding more frames to a video? There seems to be universal agreement about its utility for creating slow-motion footage out of sport highlights (such as this epic slow-motion footage I was able to get from former AFL player Gary Moorcroft). Here’s another example (although it’s more comical than anything else). The controversy stems from its use in animation. Technically, the effect it gives is incredible, but the main complaint is twofold.

Let’s look at the most infamous example (and I really mean infamous). The video is titled “What Mulan (1998) would look like in 60fps” and has racked up a mind-boggling 6 million views since it was uploaded in 2020. It shows several clips from the original 1998 Mulan movie. At first glance, the result looks spectacular. Now, call me a heathen, but I prefer the boosted frame rate. Perhaps you do, too. Maybe you hate it. In any case, I haven’t seen anything polarize people this badly since pineapple on pizza (which is just terrible, by the way).

“What’s the big deal?” I hear you say. Well, to be honest, I had no idea it was controversial until I saw a video by an animation channel I subscribe to on YouTube. While this isn’t intended to be a treatise on the topic, I really just want to mention it for the sake of writing a fair and balanced article. After all, the point of this article is not necessarily to advocate any software but rather explain the technology and its use cases.

The two major criticisms that I took from the video are as follows:

a) It wasn’t the animator’s intent

Now, I appreciate where he’s coming from on this. The videos with improved frame rates aren’t intended to usurp the original videos. In fact, I would be chagrined if studios started forcing increased frame rates upon their audience, in the same way that I was annoyed when non-widescreen episodes from The Simpsons’ golden age were zoomed in to be widescreen, which lowered the fidelity and cropped out parts of the picture (in some cases cutting out jokes). If you’re curious, check out this article by Vulture that correctly excoriates the corporate clowns who were responsible.

That being said, I don’t really feel this takes away from the original movie in the way that the Simpsons cropping did. For example, I’m sure James Cameron would prefer that everyone watch Avatar in IMAX 4K Laser Digital, but the reality is that there is no uniformity when it comes to how a movie is screened. While I confess the frame rate makes a substantial difference, I think the changes, on balance, are an improvement (and I know I will get flak just for saying that). Like I said, you are not obligated to watch Mulan in 60 fps. It was, after all, just an experiment. As far as I can tell, no full version of the movie exists in 60 fps anyway, so you can put your pitchforks away.

b) The animation looks weird or has artifacts due to technical limitations

Before you defenestrate me for my feelings on 60 fps Mulan, I will happily concede the point here. While the aforementioned Mulan example seems to use clips that were conducive to AI interpolation, I must confess that I have seen interpolation of animation that causes weird blurs and other artifacts. This is because the AI isn’t interpreting the animation in the same way a human does. It does have some impressive depth awareness (which makes this possible in the first place), but it’s not a silver-bullet solution.

4. Deepfakes

Ah, yes, deepfakes. Unless you’ve been living under a Faraday cage for the past few years, you’ve no doubt heard about deepfakes. I mentioned that Depth-Aware Video Frame Interpolation was second only to deepfakes in terms of controversy, and it’s easy to see why. Using deep learning, you can train a model to digitally superimpose another person’s likeness over the original actor.

The technology can be relatively harmless, such as making Nic Cage play both Superman and Lois Lane (if you can actually consider that harmless), or it can be truly horrifying. For instance, you could superimpose celebrities (or even non-celebrities) into porn videos without their express consent.

While the effect is not quite perfect yet, one would need an extremely good eye to tell a well-put-together deepfake from the real thing. The implications go well beyond the aforementioned issues, and include making political figures (or any other person, for that matter) appear to do or say things they never did or said in reality. As a result, it is becoming increasingly difficult to accept video and audio as reality, something which had previously been taken for granted (especially in the case of video).

As I alluded to, the technology exists for both video and audio, making this technology extremely powerful and dangerous in the wrong hands. But even a good impersonator, such as Jordan Peele in this impression of President Barack Obama, proves that we need to take the threat of deepfake disinformation extremely seriously.
